Migrate data in MongoDB by KubeBlocks

Before you start

Enable kbcli migration

-

Install KubeBlocks: You can install KubeBlocks by kbcli or by Helm.

-

kbcli addon list

kbcli addon enable migration

Configure the source

Modify the configuration of the source to support CDC.

- Replication cluster instance: no scaling is required. You need to provide the address of the primary for migration.

- Standalone instance: it is required to scale a Standalone to a Replica Set with one node to use CDC. Refer to Convert a Standalone to a Replica Set for details.

Check the account permission

Make sure both the source and sink account meet the following permissions.

- The source account: The read permission of the repository to be migrated, admin, and local.

- The sink account: The read and write permission of the repository to be migrated, and the read permission of admin and local.

Prepare data sampling

It is recommended to prepare data sampling for verification after the migration to ensure correctness.

Migrate data

Steps

-

Create a migration task.

kbcli migration create mytask --template apecloud-mongo2mongo \

--source user:123456@127.0.0.1:5432/db_test \

--sink user:123456@127.0.0.2:5432/db_test \

--migration-object '"public.table_test_1","public.table_test_2"'📎 Table 1. Options explanation

Option Descriprion mystask The name of the migration task. You can customize it. --templateIt specifies the migration template. --template apecloud-mongo2mongomeans that this migration task uses the template of migrating from MongoDB to MongoDB created by KubeBlocks. Runkbcli migration templatesto view all available templates and the supported database information.--sourceIt specifies the source. user:123456@127.0.0.1:5432/db_testin the above example follows the format${user_name}:${password}@${database connection url}/${database}. For this guide, the connection URL uses the public network address.--sinkIt specifies the destination. user:123456@127.0.0.2:5432/db_testin the above example follows the format${user_name}:${password}@${database connection url}/${database}. For this guide, the connection URL uses the service address inside the Kubernetes cluster.--migration-objectIt specifies the migration object. The above example describes data in "public.table_test_1" and "public.table_test_2", including structure data, stock data, and incremental data generated during running migration task, will be migrated to the sink. -

(Optional) Specify migration steps by the flag

--steps.The default steps follow the order precheck -> structure initialization -> data initialization -> incremental migration. You can use

--stepsto specify migration steps. For example, perform tasks in the order of precheck -> data initialization -> incremental migration.kbcli migration create mytask --template apecloud-mysql2mysql \

--source user:123456@127.0.0.1:5432/db_test \

--sink user:123456@127.0.0.2:5432/db_test \

--migration-object '"public.table_test_1","public.table_test_2"'

--steps precheck=true,init-struct=false,init-data=true,cdc=true -

View the task status.

# View the migration task list

kbcli migration list

# View the details of a specified task

kbcli migration describe ${migration-task-name}Pay attention to Initialization, CDC, and CDC Metrics.

-

Initialization

- Precheck: If the status shows

Failed, it means the initialization precheck does not pass. Troubleshoot the initialization by the following examples in troubleshooting. - Init-struct: Structure initialization. Idempotent processing logic is adopted. A failure occurs only when a severe problem occurs, such as failing to connect a database.

- Init-data: Data initialization. If there is a large amount of stock data, it takes a long time to perform this step and you should pay attention to Status.

- Precheck: If the status shows

-

CDC: Incremental migration. Based on the timestamp recorded by the system before the init-data step, the system starts data migration following eventual consistency and performs capturing the source library WAL (Write Ahead Log) changes -> writing to the sink. Under normal circumstances, the CDC phase continues if the migration link is not actively terminated.

-

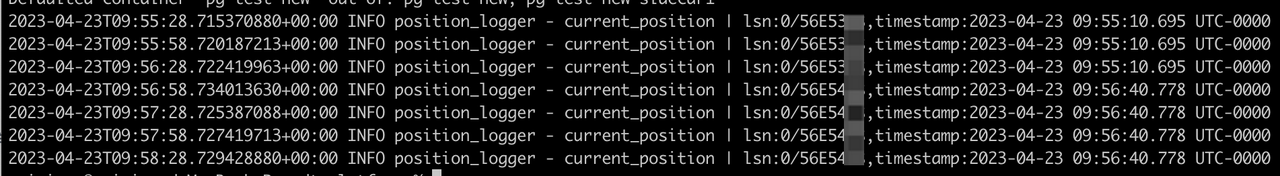

CDC Metrics: Incremental migration indicators. Currently, the indicators mainly provide the WAL LSN (Log Sequencer Number) of the source library and the corresponding timestamp (note that the timestamp shows the local time zone of the Pod Container runtime) when the CDC process has completed "capturing -> writing" process.

noteThe CDC Metrics are updated every 10 minutes by the system, i.e. if there exists continuous data writing into the source, metrics.timestamp here delays 10 minutes compared with the current time.

-

-

Validate the migration with the prepared data sampling.

Troubleshooting

If any step above fails, run the command below to troubleshoot the failure.

# --step: Specify the step. Allowed values: precheck,init-struct,init-data,cdc

kbcli migration logs ${migration-task-name} --step ${step-name}

Switch applications

Before you start

- Make sure the KubeBlocks migration task runs normally.

- To differentiate the dialogue information and to improve data security, it is recommended to create and authorize another account dedicated to data migration.

- For safety concerns, it is necessary to stop the business write and switch the application during off-peak hours.

- Before switching the application, it is recommended to prepare data sampling for verification after switching to ensure correctness.

Steps

-

Check the migration task status and ensure the task is performed normally.

-

Describe the migration task details and all steps in Initialization are

Completeand CDC isRunning.kbcli migration describe ${migration-task-name} -

Under the prerequisite that there exists continuous write into the source, observe whether the timestamp is still in progress and whether there is almost no delay. For example,

kbcli migration logs ${migration-task-name} --step cdc | grep current_positionThe results update every 10 seconds.

-

-

Pause the business and stop new business data from being written into the source.

-

View the migration status again and ensure the migration task runs normally, lasting at least one minute.

Refer to the operations in step 1 and observe whether the link is normal and the timestamp meets the expectation.

-

Use the sink to restore the business.

-

Validate the switch with the prepared data sampling.

Clean up the environment

After the migration task is completed, you can terminate the migration task and function.

Terminate the migration task

Deleting the migration task does not affect the data in the source and sink.

kbcli migration terminate ${migration-task-name}

Terminate kbcli migration

-

Check whether there are running migration tasks.

kbcli migration list -

Disable the migration add-on.

kbcli addon disable migration -

Delete the Kubernetes CRD (Custom Resource Definition) manually.

kubectl delete crd migrationtasks.datamigration.apecloud.io migrationtemplates.datamigration.apecloud.io serialjobs.common.apecloud.io