Monitoring

This guide demonstrates how to configure monitoring for Qdrant clusters in KubeBlocks, using the Prometheus Operator to scrape metrics through a PodMonitor and Grafana to visualize them.

Before proceeding, ensure that the 'demo' namespace used throughout this guide exists. Create it with:

kubectl create ns demo

namespace/demo created

Deploy the kube-prometheus-stack using Helm:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm install prometheus prometheus-community/kube-prometheus-stack \
  -n monitoring \
  --create-namespace

Check all components are running:

kubectl get pods -n monitoring

Expected Output:

NAME READY STATUS RESTARTS AGE

alertmanager-prometheus-kube-prometheus-alertmanager-0 2/2 Running 0 114s

prometheus-grafana-75bb7d6986-9zfkx 3/3 Running 0 2m

prometheus-kube-prometheus-operator-7986c9475-wkvlk 1/1 Running 0 2m

prometheus-kube-state-metrics-645c667b6-2s4qx 1/1 Running 0 2m

prometheus-prometheus-kube-prometheus-prometheus-0 2/2 Running 0 114s

prometheus-prometheus-node-exporter-47kf6 1/1 Running 0 2m1s

prometheus-prometheus-node-exporter-6ntsl 1/1 Running 0 2m1s

prometheus-prometheus-node-exporter-gvtxs 1/1 Running 0 2m1s

prometheus-prometheus-node-exporter-jmxg8 1/1 Running 0 2m1s

KubeBlocks uses a declarative approach for managing Qdrant Clusters. Below is an example configuration for deploying a Qdrant Cluster with 3 replicas.

Apply the following YAML configuration to deploy the cluster:

apiVersion: apps.kubeblocks.io/v1
kind: Cluster
metadata:
  name: qdrant-cluster
  namespace: demo
spec:
  terminationPolicy: Delete
  clusterDef: qdrant
  topology: cluster
  componentSpecs:
    - name: qdrant
      serviceVersion: 1.10.0
      replicas: 3
      resources:
        limits:
          cpu: "0.5"
          memory: "0.5Gi"
        requests:
          cpu: "0.5"
          memory: "0.5Gi"
      volumeClaimTemplates:
        - name: data
          spec:
            storageClassName: ""
            accessModes:
              - ReadWriteOnce
            resources:
              requests:
                storage: 20Gi

Monitor the cluster status until it transitions to the Running state:

kubectl get cluster qdrant-cluster -n demo -w

Expected Output:

kubectl get cluster qdrant-cluster -n demo

NAME CLUSTER-DEFINITION TERMINATION-POLICY STATUS AGE

qdrant-cluster qdrant Delete Creating 49s

qdrant-cluster qdrant Delete Running 62s
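For scripted setups, the same wait can be expressed as a polling loop instead of watching the output by hand. The following is a minimal sketch, not part of KubeBlocks: the helper names `wait_for_phase` and `kubectl_phase` are hypothetical, and it assumes the value shown in the STATUS column is exposed as `.status.phase` on the Cluster object.

```python
import subprocess
import time
from typing import Callable


def wait_for_phase(
    get_phase: Callable[[], str],
    target: str = "Running",
    timeout_s: float = 300.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll get_phase() until it returns target; give up after timeout_s."""
    waited = 0.0
    while True:
        if get_phase() == target:
            return True
        if waited >= timeout_s:
            return False
        sleep(interval_s)
        waited += interval_s


def kubectl_phase() -> str:
    """Read the Cluster phase with kubectl.

    Assumption: the phase is stored in .status.phase of the Cluster resource.
    """
    result = subprocess.run(
        ["kubectl", "get", "cluster", "qdrant-cluster", "-n", "demo",
         "-o", "jsonpath={.status.phase}"],
        capture_output=True, text=True, check=False,
    )
    return result.stdout.strip()
```

Calling `wait_for_phase(kubectl_phase)` would block until the cluster reports Running or five minutes pass. The `get_phase` and `sleep` parameters are injectable so the loop can be exercised without a live cluster.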

Check the pod status:

kubectl get pods -l app.kubernetes.io/instance=qdrant-cluster -n demo

Expected Output:

NAME READY STATUS RESTARTS AGE

qdrant-cluster-qdrant-0 2/2 Running 0 1m43s

qdrant-cluster-qdrant-1 2/2 Running 0 1m28s

qdrant-cluster-qdrant-2 2/2 Running 0 1m14s

Once the cluster status becomes Running, your Qdrant cluster is ready for use.

If you are creating the cluster for the very first time, it may take some time to pull images before running.

Verify that the metrics endpoint is reachable from inside a pod:

kubectl -n demo exec -it pods/qdrant-cluster-qdrant-0 -c kbagent -- \
  curl -s http://127.0.0.1:6333/metrics | head -n 50
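The endpoint returns metrics in the Prometheus text exposition format. If you want to inspect that output programmatically, a small parser along the following lines may help. This is a simplified sketch: `parse_metrics` is a hypothetical helper, it ignores optional timestamps and leaves escaped label values as they are, and the metric names in the test data are illustrative, not a statement of what Qdrant actually exports.

```python
import re
from typing import Dict, List, Tuple

# metric name, optional {label="value",...} block, then the sample value
_SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
_LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_metrics(text: str) -> List[Sample]:
    """Parse exposition-format text into (name, labels, value) tuples.

    Comment lines (# HELP, # TYPE) and lines that do not look like
    samples are skipped.
    """
    samples: List[Sample] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(_LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples
```

Feeding the saved output of the curl command above to `parse_metrics` yields one tuple per sample, which can then be filtered by metric name or label.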

Create a PodMonitor so that Prometheus scrapes the Qdrant pods:

apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: qdrant-cluster-pod-monitor
  namespace: demo
  labels: # Must match the setting in 'prometheus.spec.podMonitorSelector'
    release: prometheus
spec:
  jobLabel: app.kubernetes.io/managed-by
  # Defines the labels that are transferred from the
  # associated Kubernetes 'Pod' object onto the ingested metrics.
  # Set the labels according to your own needs.
  podTargetLabels:
    - app.kubernetes.io/instance
    - app.kubernetes.io/managed-by
    - apps.kubeblocks.io/component-name
    - apps.kubeblocks.io/pod-name
  podMetricsEndpoints:
    - path: /metrics
      port: tcp-qdrant # Must match exporter port name
      scheme: http
  namespaceSelector:
    matchNames:
      - demo # Target namespace
  selector:
    matchLabels:
      app.kubernetes.io/instance: qdrant-cluster
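Labels listed under podTargetLabels do not keep their Kubernetes names on the ingested metrics, because Prometheus label names cannot contain dots, slashes, or dashes. The sketch below reproduces the mapping visible in this guide's query output (for example, 'app.kubernetes.io/instance' becomes 'app_kubernetes_io_instance'). The function name is hypothetical, and the rule of replacing each unsupported character with an underscore is an assumption inferred from that output, not a quote of the operator's implementation.

```python
import re


def to_prometheus_label(kubernetes_label: str) -> str:
    """Replace every character outside [a-zA-Z0-9_] with an underscore."""
    return re.sub(r"[^a-zA-Z0-9_]", "_", kubernetes_label)


# The four labels from the PodMonitor's podTargetLabels list.
for label in (
    "app.kubernetes.io/instance",
    "app.kubernetes.io/managed-by",
    "apps.kubeblocks.io/component-name",
    "apps.kubeblocks.io/pod-name",
):
    print(f"{label} -> {to_prometheus_label(label)}")
```

Use the converted names when writing PromQL selectors against these metrics.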

PodMonitor Configuration Guide

| Parameter | Required | Description |
|---|---|---|
| port | Yes | Must match the exporter port name ('tcp-qdrant') |
| namespaceSelector | Yes | Targets the namespace where Qdrant runs |
| labels | Yes | Must match Prometheus's podMonitorSelector |
| path | No | Metrics endpoint path (default: /metrics) |
| interval | No | Scraping interval (default: 30s) |
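The required rows of this table can be turned into a quick pre-flight check before applying a manifest. The following sketch works on a manifest that is already loaded as a Python dict. `check_pod_monitor` is a hypothetical helper that mirrors the table above, not the PodMonitor CRD schema, and the `release: prometheus` selector is taken from the example manifest in this guide.

```python
from typing import Any, Dict, List


def check_pod_monitor(
    manifest: Dict[str, Any], selector_labels: Dict[str, str]
) -> List[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems: List[str] = []

    # labels: must match Prometheus's podMonitorSelector
    labels = manifest.get("metadata", {}).get("labels", {})
    for key, value in selector_labels.items():
        if labels.get(key) != value:
            problems.append(f"metadata.labels is missing {key}={value}")

    spec = manifest.get("spec", {})

    # port: every endpoint needs a named port
    endpoints = spec.get("podMetricsEndpoints", [])
    if not endpoints:
        problems.append("spec.podMetricsEndpoints is empty")
    for index, endpoint in enumerate(endpoints):
        if not endpoint.get("port"):
            problems.append(f"podMetricsEndpoints[{index}] has no port")

    # namespaceSelector: must name the namespace where Qdrant runs
    if not spec.get("namespaceSelector", {}).get("matchNames"):
        problems.append("spec.namespaceSelector.matchNames is empty")

    return problems
```

For the manifest in this guide, `check_pod_monitor(manifest, {"release": "prometheus"})` should return an empty list. The check cannot confirm that the port name exists on the pods; that still has to be compared against the pod spec.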

Forward and access Prometheus UI:

kubectl port-forward svc/prometheus-kube-prometheus-prometheus -n monitoring 9090:9090

Open your browser and navigate to: http://localhost:9090/targets

Check if there is a scrape job corresponding to the PodMonitor (the job name is 'demo/qdrant-cluster-pod-monitor').

Expected State:

The target's labels include those defined in podTargetLabels (e.g., 'app_kubernetes_io_instance').

Verify metrics are being scraped:

curl -sG "http://localhost:9090/api/v1/query" --data-urlencode 'query=up{app_kubernetes_io_instance="qdrant-cluster"}' | jq

Example Output:

{

"status": "success",

"data": {

"resultType": "vector",

"result": [

{

"metric": {

"__name__": "up",

"app_kubernetes_io_instance": "qdrant-cluster",

"app_kubernetes_io_managed_by": "kubeblocks",

"apps_kubeblocks_io_component_name": "qdrant",

"apps_kubeblocks_io_pod_name": "qdrant-cluster-qdrant-3",

"container": "qdrant",

"endpoint": "tcp-qdrant",

"instance": "10.244.0.64:6333",

"job": "kubeblocks",

"namespace": "demo",

"pod": "qdrant-cluster-qdrant-3"

},

"value": [

1747583924.040,

"1"

]

},

{

"metric": {

"__name__": "up",

"app_kubernetes_io_instance": "qdrant-cluster",

"app_kubernetes_io_managed_by": "kubeblocks",

"apps_kubeblocks_io_component_name": "qdrant",

"apps_kubeblocks_io_pod_name": "qdrant-cluster-qdrant-0",

"container": "qdrant",

"endpoint": "tcp-qdrant",

"instance": "10.244.0.62:6333",

"job": "kubeblocks",

"namespace": "demo",

"pod": "qdrant-cluster-qdrant-0"

},

"value": [

1747583924.040,

"1"

]

},

{

"metric": {

"__name__": "up",

"app_kubernetes_io_instance": "qdrant-cluster",

"app_kubernetes_io_managed_by": "kubeblocks",

"apps_kubeblocks_io_component_name": "qdrant",

"apps_kubeblocks_io_pod_name": "qdrant-cluster-qdrant-2",

"container": "qdrant",

"endpoint": "tcp-qdrant",

"instance": "10.244.0.60:6333",

"job": "kubeblocks",

"namespace": "demo",

"pod": "qdrant-cluster-qdrant-2"

},

"value": [

1747583924.040,

"1"

]

}

]

}

}
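A response of this shape is easy to reduce to a per-pod health summary. The sketch below assumes the JSON has already been parsed into a dict, for example with `json.loads` on the curl output. `pods_up` is a hypothetical helper, and it relies only on the fields visible in the example output above.

```python
from typing import Any, Dict


def pods_up(response: Dict[str, Any]) -> Dict[str, bool]:
    """Map each pod name to True if its 'up' sample equals "1"."""
    if response.get("status") != "success":
        raise ValueError(f"query failed: {response.get('status')!r}")

    status: Dict[str, bool] = {}
    for series in response["data"]["result"]:
        pod = series["metric"].get("pod", "<unknown>")
        # An instant-vector sample is a [timestamp, "value"] pair.
        _timestamp, value = series["value"]
        status[pod] = value == "1"
    return status
```

Any pod that maps to False, or that is missing from the result entirely, is not being scraped successfully and is worth checking on the Prometheus targets page.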

Port-forward and log in:

kubectl port-forward svc/prometheus-grafana -n monitoring 3000:80

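Open your browser and navigate to http://localhost:3000. Log in with the default credentials of the kube-prometheus-stack chart (username 'admin', password 'prom-operator', unless overridden at install time):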

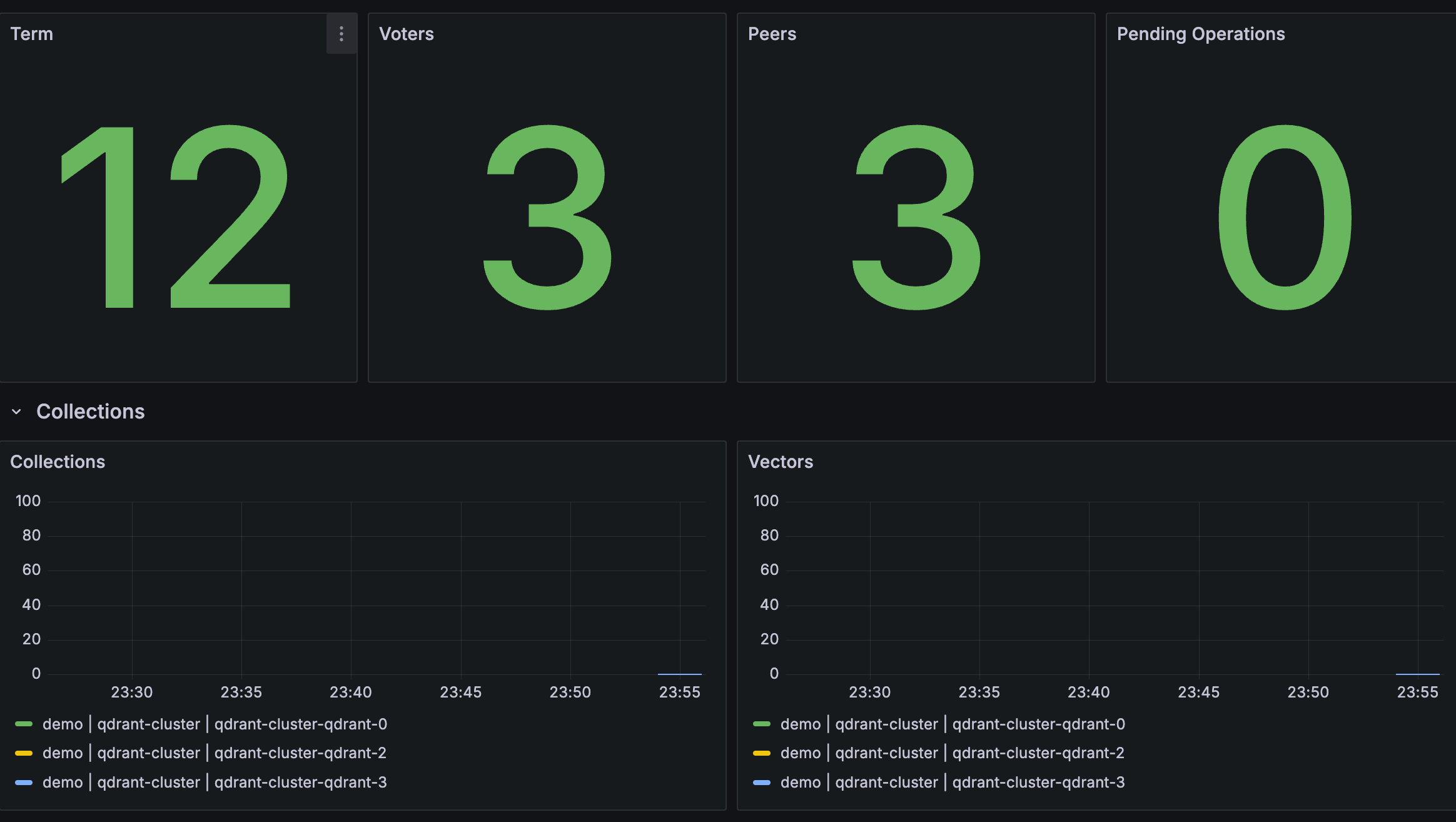

Import the KubeBlocks Qdrant dashboard:

https://raw.githubusercontent.com/apecloud/kubeblocks-addons/main/addons/qdrant/dashboards/qdrant-overview.json

To delete all the created resources, run the following commands:

kubectl delete cluster qdrant-cluster -n demo

kubectl delete podmonitor qdrant-cluster-pod-monitor -n demo

kubectl delete ns demo

In this tutorial, we set up observability for a Qdrant cluster in KubeBlocks using the Prometheus Operator.

By configuring a PodMonitor, we enabled Prometheus to scrape metrics from the Qdrant exporter.

Finally, we visualized these metrics in Grafana. This setup provides valuable insights for monitoring the health and performance of your Qdrant databases.